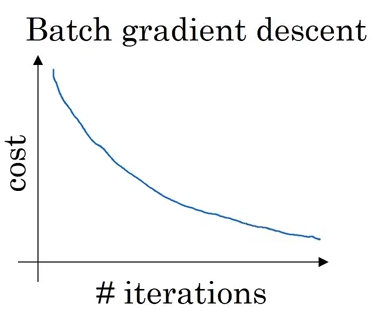

Now, the contour of a very nice loss function may look like the one in the accompanying figure. Why do I say a very nice loss function? Because a loss function having a contour like this is like Santa: it doesn't exist. However, it still serves as a decent pedagogical tool to get some of the most important ideas about gradient descent across.

The x and y axes represent the values of the two weights. The z axis represents the value of the loss function for a particular pair of weight values. Our goal is to find the particular values of the weights for which the loss is minimum. Such a point is called a minimum of the loss function.

You have randomly initialized the weights in the beginning, so your neural network is probably behaving like a drunk version of yourself, classifying images of cats as humans. Such a situation corresponds to point A on the contour, where the network is performing badly and consequently the loss is high. We need to find a way to somehow navigate to the bottom of the "valley", to point B, where the loss function has a minimum. So how do we do that?

When we initialize our weights, we are at point A in the loss landscape. The first thing we do is check, out of all possible directions in the x-y plane, which direction brings about the steepest decline in the value of the loss function. This is the direction we have to move in. It is exactly opposite to the direction of the gradient, because the gradient, the higher dimensional cousin of the derivative, gives us the direction of steepest ascent. To wrap your head around it, consider the following figure.

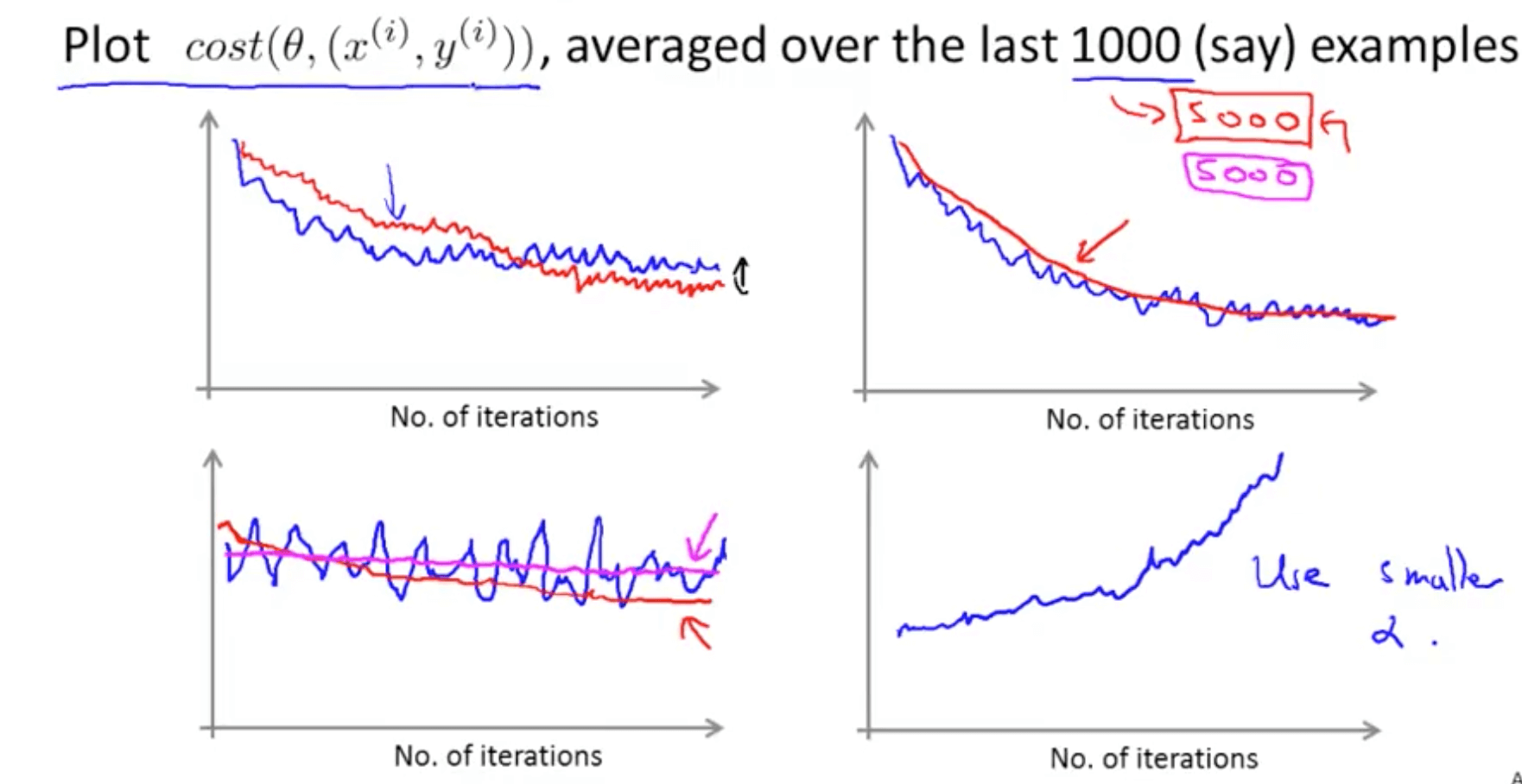
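To make the walk from point A to point B concrete, here is a minimal sketch of gradient descent on a two-weight loss. The loss `w0**2 + 3 * w1**2`, the starting point, the learning rate and the step count are all assumptions chosen for illustration; a real network's loss is nowhere near this friendly.

```python
import numpy as np

def loss(w):
    """Toy loss over two weights (an assumed, very nice bowl, not a real network loss)."""
    return w[0] ** 2 + 3.0 * w[1] ** 2

def gradient(w):
    """Analytic gradient of the toy loss; it points in the direction of steepest ascent."""
    return np.array([2.0 * w[0], 6.0 * w[1]])

def gradient_descent(w_start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, i.e. in the direction of steepest descent."""
    w = np.asarray(w_start, dtype=float)
    for _ in range(steps):
        w = w - learning_rate * gradient(w)
    return w

# Point A: an arbitrary starting point standing in for randomly initialized weights.
point_a = np.array([4.0, -3.0])
# Point B: where we end up after following the negative gradient.
point_b = gradient_descent(point_a)

print("loss at A:", loss(point_a))
print("loss at B:", loss(point_b))
```

Each update subtracts the gradient scaled by the learning rate, so the weights slide downhill towards the bottom of the valley. With a learning rate that is too large for the curvature of the loss, the same loop would overshoot instead of settling.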

The idea behind gradient descent is to minimize the loss in various machine learning algorithms. Mathematically speaking, it finds a local minimum of a function. To implement this, a set of parameters is defined, and the loss is minimized with respect to them. Once the parameters are assigned initial values, the error or loss is calculated. Next, the weights are updated so that the error decreases.

In mini-batch gradient descent, ‘b’ examples are processed in every iteration, where b < m. The value ‘m’ refers to the total number of training examples in the dataset, and ‘b’ is a value less than ‘m’. If the number of training examples is high, the data is processed in batches, where every batch contains ‘b’ training examples per iteration. Mini-batch gradient descent works well with a large number of training examples and can reduce the number of iterations needed.

Deep Learning, to a large extent, is really about solving massive, nasty optimization problems. A neural network is merely a very complicated function, consisting of millions of parameters, that represents a mathematical solution to a problem. Consider the task of image classification. AlexNet is a mathematical function that takes an array representing the RGB values of an image and produces a bunch of class scores as output.

By training neural networks, we essentially mean minimising a loss function. The value of this loss function gives us a measure of how far from perfect the performance of our network is on a given dataset.

Let us, for the sake of simplicity, assume our network has only two parameters. In practice, this number would be in the millions or even billions, but we'll stick to the two-parameter example throughout the post so as not to drive ourselves nuts while trying to visualise things.
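The mini-batch procedure described above can be sketched in a few lines. This is only an illustration under stated assumptions: the model is a two-parameter linear regression with a mean squared error loss, the data is synthetic and noise-free, and the function name `minibatch_gradient_descent` and its defaults are made up for this example.

```python
import numpy as np

def minibatch_gradient_descent(X, y, b=20, learning_rate=0.1, epochs=50, seed=0):
    """Fit weights w so that X @ w approximates y, using batches of b examples (b < m)."""
    rng = np.random.default_rng(seed)
    m, n = X.shape                      # m = total number of training examples
    w = np.zeros(n)                     # initial parameter values
    for _ in range(epochs):
        order = rng.permutation(m)      # reshuffle the examples every epoch
        for start in range(0, m, b):
            idx = order[start:start + b]
            X_b, y_b = X[idx], y[idx]
            error = X_b @ w - y_b       # prediction error on this batch
            grad = 2.0 * X_b.T @ error / len(idx)  # gradient of the batch mean squared error
            w -= learning_rate * grad   # update the weights to reduce the error
    return w

# Synthetic data (assumed): m = 200 examples, two parameters, no noise.
data_rng = np.random.default_rng(42)
X = data_rng.normal(size=(200, 2))
true_w = np.array([1.5, -2.0])
y = X @ true_w

w_hat = minibatch_gradient_descent(X, y, b=20)
print("estimated weights:", w_hat)
```

Every update here looks at only `b` examples instead of all `m`, which is what keeps each iteration cheap when the training set is large.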
